This NOTE is a technical overview and introduction to the Platform for Privacy Preferences (P3P), to be submitted for publication in the Communications of the ACM. While the NOTE's topic is the W3C's P3P Activity, it is not a direct result of a W3C Interest or Working Group and its publication indicates no endorsement of its content by the W3C.

Comments are welcome and should be addressed to the authors above.

Copyright © 1998 Lorrie Cranor and Joseph Reagle. All Rights Reserved.

Distribution policies are governed by the W3C intellectual property terms.

The World Wide Web Consortium (W3C)’s Platform for Privacy Preferences Project (P3P) provides a platform for trusted and informed online interactions. The goal of P3P is to enable users to exercise preferences about Web sites' privacy practices. P3P applications will allow users to be informed about Web site practices, delegate decisions to their computer agent when they wish, and tailor relationships with specific sites. We believe that users' confidence will increase when presented with meaningful choices about services and their privacy practices.

P3P is not a silver bullet; it is complemented by other technologies as well as regulatory and self-regulatory approaches to privacy. Some technologies have the ability to technically preclude practices that may be unacceptable to a user. For example, digital cash, anonymizers, and encryption limit the information that the recipient or eavesdroppers can collect during an interaction. Furthermore, laws and industry guidelines codify and enforce expectations regarding information practices as the default or baseline for interactions.

A compelling feature of P3P is that localized decision making enables flexibility in a medium that encompasses diverse preferences, cultural norms, and regulatory jurisdictions. However, for P3P to be effective, users must be willing and able to make meaningful decisions when presented with disclosures. In light of concerns about overloading users, P3P assists users by allowing them to delegate much of the information processing and decision-making to their computer agents when they wish. It is our contention that this approach is efficacious, especially when used in conjunction with complementary technical and social mechanisms.

P3P is a project of the W3C, an international industry consortium that develops common protocols to promote the evolution of the World Wide Web and ensure its interoperability. The development of P3P has been a consensus process involving representatives from over a dozen W3C member companies and organizations, as well as invited experts from around the world.

P3P is designed to help users reach informed agreements with services (Web sites and applications that declare privacy practices and make data requests). As the first step towards reaching an agreement, a service sends a machine-readable P3P proposal, shown in Figure 1, in which the organization responsible for the service declares its identity and privacy practices. A proposal applies to a specific realm, identified by a URI or set of URIs. It includes statements about what data are collected (some of which may be optional), the purpose of the collection, with whom data may be shared, and whether data will be used in an identifiable manner. The P3P proposal may also contain other information including the name of an assuring party and disclosures related to data access and retention.

Proposals can be automatically parsed by user agents such as Web browsers and compared with privacy preferences set by the user. If there is a match between service practices and user preferences, a P3P agreement is reached. Users should be able to configure their agents to reach agreement with, and proceed seamlessly to, services that have certain types of practices; users should also be able to receive prompts or leave when encountering services that engage in potentially objectionable practices. Thus, users need not read the privacy policies at every Web site they visit to be assured that information exchanged (if any) is going to be appropriately used.
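To make the matching step concrete, here is a minimal Python sketch of how a user agent might compare a proposal's statements against stored preferences. The `Statement` and `Preferences` classes, the purpose and recipient labels, and the `evaluate` function are hypothetical simplifications. They are not the P3P grammar or the harmonized vocabulary's codes. The sketch checks only purposes, recipients, and identifiable use; a fuller agent would also weigh which data elements are requested.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Statement:
    """One statement from a proposal: which data, for what purposes, shared with whom."""
    data: frozenset[str]
    purposes: frozenset[str]
    recipients: frozenset[str]
    identifiable: bool


@dataclass(frozen=True)
class Preferences:
    """Hypothetical user settings: what the user accepts without being asked."""
    allowed_purposes: frozenset[str]
    allowed_recipients: frozenset[str]
    allow_identifiable: bool
    on_mismatch: str = "prompt"  # or "leave"


def statement_ok(s: Statement, prefs: Preferences) -> bool:
    # Every declared purpose and recipient must be acceptable to the user.
    return (
        s.purposes <= prefs.allowed_purposes
        and s.recipients <= prefs.allowed_recipients
        and (prefs.allow_identifiable or not s.identifiable)
    )


def evaluate(statements: list[Statement], prefs: Preferences) -> str:
    """Return "agree" if every statement matches, otherwise the user's mismatch action."""
    if all(statement_ok(s, prefs) for s in statements):
        return "agree"
    return prefs.on_mismatch
```

With `on_mismatch` set to `"leave"`, the agent silently walks away from a mismatched service. With `"prompt"`, it defers the decision to the user.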

Some P3P implementations will likely support a data repository where users can store information they are willing to release to certain services. If they reach an agreement that allows the collection of specific data elements, such information can be transferred automatically from the repository. Services may also request to store data in the user's repository. These read and write requests are governed by the P3P agreement reached with the user. In addition, the repository can be used to store site-specific IDs that allow for pseudonymous interactions with Web sites that are governed by a P3P agreement.

At a high level, P3P can be viewed simply as a protocol for exchanging structured data. An extension mechanism of HTTP/1.1 [1] is used to transport information (proposals and data elements) between a client and service. At a more detailed level, P3P is a specification of syntax and semantics for describing both information practices and data elements. The specification uses XML [2] and RDF [3] to capture the syntax, structure, and semantics of the information. (XML is a language for creating elements used to structure data; RDF provides a restricted data model for structuring data such that it can be used for describing Web resources and their relations to other resources.) P3P was designed such that applications can be efficiently implemented as part of a wide variety of clients and services including those with limited data storage facilities and those with high communications latencies.

In the following sections we describe the process of reaching an agreement, how P3P can be used to facilitate pseudonymous interactions, the role of the P3P user data repository, and the P3P user experience. We conclude with a discussion of P3P's relationship to larger privacy questions.

CoolCatalog, a member of TrustUs, makes the following statement for the Web pages at http://www.CoolCatalog.com/catalogue/. CoolCatalog is accountable to http://www.GoodPrivacy.org.

We collect clickstream data in our HTTP logs. We also collect your first name, age, and gender to customize our catalog pages for the type of clothing you are likely to be interested in and for our own research and product development. We do not use this information in a personally-identifiable way. We do not redistribute any of this information outside of our organization. We do not provide access capabilities to information we may have from you, but we do have retention and opt-out policies, which you can read about at our privacy page http://www.CoolCatalog.com/PrivacyPractice.html.

```xml
<PROP realm="http://www.CoolCatalog.com/catalogue/" entity="CoolCatalog"
      agreeID="94df1293a3e519bb" assurance="http://www.GoodPrivacy.org">
  <USES>
    <STATEMENT purpose="1" recipient="0" id="0">
      <REF name="Web.Abstract.ClientClickStream"/>
    </STATEMENT>
  </USES>
  <USES>
    <STATEMENT purpose="2,3" recipient="0" id="0"
               consequence="a site with clothes you'd appreciate.">
      <WITH>
        <PREFIX name="User.">
          <REF name="Name.First"/>
          <REF name="Bdate.Year" OPTIONAL="1"/>
          <REF name="Gender"/>
        </PREFIX>
      </WITH>
    </STATEMENT>
  </USES>
  <DISCLOSURE discURI="http://www.CoolCatalog.com/PrivacyPractice.html"
              access="3" other="0,1"/>
</PROP>
```

The concept of an agreement in P3P is more than a facsimile of a real-world handshake between two parties. Agreements bind the data solicitation to privacy practices, act as a useful shorthand for future data requests, and clearly represent which practices are in effect for any realm (URI or set of URIs). An agreement is always stated from the point of view of the service and contains identifying information for the service, but it may be created by the user and sent to the service for approval. The P3P proposal should cover all relevant data elements and practices: if a service wants to collect a data element, it must be mentioned in the proposal.

Proposals are encoded in XML according to the RDF data model. The P3P Syntax Specification [4] specifies an RDF application that defines the structure, or grammar, of a P3P proposal. The harmonized vocabulary [5] is an XML/RDF schema that defines the specific terms that fit into the P3P grammar. For example, the P3P grammar specifies that P3P statements must declare the purposes for which data are collected, while the harmonized vocabulary specifies a list of six specific purposes. P3P can support multiple schemas. However, P3P is likely to be most effective when a single base vocabulary is widely used, since information practice statements are most useful when they can be readily understood by users and their computer agents. Complementary vocabularies may develop to cater to jurisdiction-specific concerns not addressed by the base vocabulary. This can be easily accomplished through the XML-namespace [6] facility, which allows tags from different XML schemas to be intermixed.
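As a rough illustration of how XML namespaces keep a base vocabulary and a complementary one apart within a single document, the Python sketch below parses a small fragment with the standard `xml.etree.ElementTree` module. It groups the elements by the namespace they come from. Both namespace URIs and the `RetentionNote` element are invented placeholders, not real P3P schemas.

```python
import xml.etree.ElementTree as ET

# Hypothetical namespace URIs standing in for a base and a jurisdiction-specific vocabulary.
BASE = "http://example.org/p3p-base"
LOCAL = "http://example.org/p3p-local"

DOC = f"""<PROP xmlns="{BASE}" xmlns:loc="{LOCAL}">
  <STATEMENT purpose="2"/>
  <loc:RetentionNote days="30"/>
</PROP>"""


def split_by_vocabulary(xml_text: str) -> dict[str, list[str]]:
    """Group child elements by namespace URI; ElementTree reports tags as '{uri}local'."""
    groups: dict[str, list[str]] = {}
    for child in ET.fromstring(xml_text):
        uri, _, local = child.tag[1:].partition("}")
        groups.setdefault(uri, []).append(local)
    return groups
```

An agent that understands only the base vocabulary could act on the `BASE` group and flag anything else for the user, rather than misreading it.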

Figure 1 provides an example privacy proposal in both English and P3P syntax. Notice that this privacy proposal enumerates the data elements that the service proposes to collect and explains how each will be used. It also identifies the service and its assuring party. The assuring party is the organization that attests that the service will abide by its proposal. Assurance may come from the service provider or an independent assuring party that may take legal or other punitive action against the service provider if it violates an agreement. TRUSTe (discussed elsewhere in this issue) is one such organization that has provided Web site privacy assurances, even before the development of P3P. In addition to accountability to an assuring party, services may also be held accountable to industry guidelines and laws. In the United States, for example, service providers that misrepresent their privacy practices in a P3P proposal might be held accountable under fraud and deceptive practices laws.
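Because a proposal is ordinary XML, a user agent can extract the service identity, the assuring party, and the requested data elements with a standard parser. The Python sketch below applies `xml.etree.ElementTree` to an abridged copy of the Figure 1 proposal, with the `consequence` attribute and `DISCLOSURE` element omitted. It assumes that names under `PREFIX` are formed by simple concatenation, as Figure 1 suggests. It is a reading aid, not a conforming P3P parser.

```python
import xml.etree.ElementTree as ET

# Abridged copy of the Figure 1 proposal (consequence and DISCLOSURE omitted).
PROPOSAL = """<PROP realm="http://www.CoolCatalog.com/catalogue/" entity="CoolCatalog"
  agreeID="94df1293a3e519bb" assurance="http://www.GoodPrivacy.org">
 <USES><STATEMENT purpose="1" recipient="0" id="0">
  <REF name="Web.Abstract.ClientClickStream"/></STATEMENT></USES>
 <USES><STATEMENT purpose="2,3" recipient="0" id="0">
  <WITH><PREFIX name="User.">
   <REF name="Name.First"/><REF name="Bdate.Year" OPTIONAL="1"/><REF name="Gender"/>
  </PREFIX></WITH></STATEMENT></USES>
</PROP>"""


def summarize(xml_text: str) -> dict:
    """Return identity, assurance, realm, and (element name, optional?) pairs."""
    root = ET.fromstring(xml_text)
    elements = []
    for stmt in root.iter("STATEMENT"):
        # REF elements directly under STATEMENT carry full names.
        for ref in stmt.findall("REF"):
            elements.append((ref.get("name"), ref.get("OPTIONAL") == "1"))
        # REF elements under WITH/PREFIX are relative to the prefix name.
        for prefix in stmt.iter("PREFIX"):
            for ref in prefix.findall("REF"):
                full = prefix.get("name") + ref.get("name")
                elements.append((full, ref.get("OPTIONAL") == "1"))
    return {
        "entity": root.get("entity"),
        "assurance": root.get("assurance"),
        "realm": root.get("realm"),
        "elements": elements,
    }
```

For the Figure 1 proposal this yields four elements, with only `User.Bdate.Year` marked optional.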

See the sidebar for a more detailed explanation of the proposal syntax.

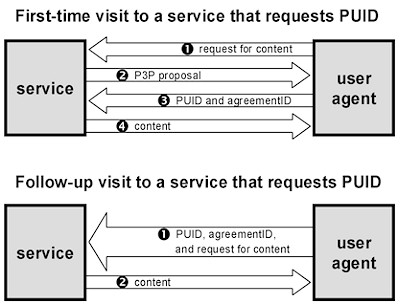

P3P interactions generally begin when a user agent contacts a service, and the service sends a proposal. The user agent considers the proposal and the user’s preferences. If the proposal and preferences are inconsistent, the agent may prompt the user, reject the proposal, send the service an alternative proposal, or ask the service to send another proposal. Sometimes the service may send multiple proposals at once, in which case the agent may analyze all of them to determine which are acceptable. Proposals may include a set of consequences that can be shown to a user to explain why a proposed practice may be valuable to the user in a particular instance, even if the user would not normally allow that practice. Once a user agent determines that a proposal is acceptable, it may accept the proposal by returning a fingerprint of the proposal, called the agreementID. User agents with sufficient storage space should record the agreements they reach and index them by agreementID.
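The text describes the agreementID only as a fingerprint of the proposal. One plausible way to compute and index such fingerprints is sketched below in Python. Several details are assumptions, not the algorithm P3P specifies: SHA-256 truncated to 16 hex digits (the length of the `agreeID` in Figure 1), the whitespace normalization, and the `AgreementStore` class.

```python
import hashlib
from typing import Optional


def fingerprint(proposal_xml: str) -> str:
    # Assumption: collapse whitespace so trivially reformatted copies hash alike.
    canonical = " ".join(proposal_xml.split())
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]


class AgreementStore:
    """Hypothetical agent-side record of agreements, indexed by agreementID."""

    def __init__(self) -> None:
        self._by_id: dict[str, str] = {}

    def accept(self, proposal_xml: str) -> str:
        agreement_id = fingerprint(proposal_xml)
        self._by_id[agreement_id] = proposal_xml
        return agreement_id  # returned to the service to signal acceptance

    def lookup(self, agreement_id: str) -> Optional[str]:
        return self._by_id.get(agreement_id)
```

Real canonicalization of XML is more involved than whitespace collapsing. The point is only that both parties must derive the same fingerprint from the same proposal.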

Once an agreement is reached, the user agent sends the service any enumerated data elements that are included in the agreed-to proposal. These may be obtained from the user’s data repository or by prompting the user. Of course, agreements may be reached in which no data is collected.

Rather than sending a new proposal to the user agent on every contact, a service may send the agreementID of an existing agreement. In doing so, the service 1) asserts that it and the user agent have already agreed to a proposal, 2) signals which proposal and privacy practices it is operating under, and 3) requests the data elements referenced in the agreement. The user agent may turn away, respond with the requested data, or request a full proposal (if it has no record of such an agreement or it desires a new one).
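The three possible responses to a service that presents an agreementID can be written as a small decision function. In this hypothetical Python sketch, `agreements` maps each agreementID to the set of data element names its agreement covers, and `repository` holds stored values. Both structures and the response labels are illustrative, not P3P message formats.

```python
from collections.abc import Mapping


def respond_to_agreement_id(
    agreement_id: str,
    agreements: Mapping[str, frozenset[str]],
    repository: Mapping[str, str],
    user_declines: bool = False,
    want_new_agreement: bool = False,
) -> tuple[str, dict[str, str]]:
    """Choose among the three responses: turn away, send data, or request a proposal."""
    if user_declines:
        return ("turn-away", {})
    covered = agreements.get(agreement_id)
    if covered is None or want_new_agreement:
        # No record of this agreement, or the user wants to negotiate a new one.
        return ("request-proposal", {})
    # Send only elements the agreement covers; missing ones would need a user prompt.
    return ("send-data", {name: repository[name] for name in covered if name in repository})
```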

It should be noted that user agents may come in a wide variety of forms. They may be implemented as Web browsers, browser plug-ins, proxy servers, or services provided by ISPs. They may sit on a user's personal computer or somewhere else on the Internet. And they may be implemented with or without user data repositories. Regardless of how they are implemented, they should act only on behalf of their users.

While many commercial Web sites are eager to maintain persistent relationships with users, most do not require personally-identifiable information. For those that simply wish to track the number of unique visitors to their sites, customize pages for repeat visitors, or serve advertising tailored to each visitor’s interests, anonymous and pseudonymous relationships work well.

Currently, many Web sites use HTTP cookies to develop relationships with visitors. By accepting a cookie containing a unique identifier from a Web site, users can identify themselves to the site when they return. However, the current HTTP cookie protocol provides minimal information to users, and cookie implementations in popular Web browsers don’t make it easy for users to control which sites to accept cookies from -- though this may change [7,8]. P3P includes two identifiers that users can exchange with services in place of cookies. The pairwise or site ID (PUID) is unique to every agreement the agent reaches with the service. If a user agrees to the use of a PUID, it will return the PUID and agreementID to URIs specified in the agreement's realm, as shown in Figure 2. The temporary or session ID (TUID) is used only for maintaining state during a single session. If a user returns to a site during another online session, a new TUID will be generated. PUIDs and TUIDs are solicited as part of an agreement and consequently have disclosures relevant to the purpose, recipients, and the identifiable use associated with them.
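The difference between the two identifiers is mainly one of lifetime. In the hypothetical Python sketch below, a PUID is generated once per agreement and reused across sessions, while a TUID is discarded at the end of each session. Using `secrets.token_hex` for identifier values and keying both maps by agreementID are assumptions for illustration.

```python
import secrets


class IdentifierManager:
    """Hypothetical agent-side bookkeeping for pairwise (PUID) and temporary (TUID) IDs."""

    def __init__(self) -> None:
        self._puids: dict[str, str] = {}  # agreementID -> PUID, kept across sessions
        self._tuids: dict[str, str] = {}  # agreementID -> TUID, current session only

    def puid(self, agreement_id: str) -> str:
        # Same agreement, same PUID: the service can recognize a returning visitor.
        if agreement_id not in self._puids:
            self._puids[agreement_id] = secrets.token_hex(8)
        return self._puids[agreement_id]

    def tuid(self, agreement_id: str) -> str:
        if agreement_id not in self._tuids:
            self._tuids[agreement_id] = secrets.token_hex(8)
        return self._tuids[agreement_id]

    def end_session(self) -> None:
        # Forget all TUIDs; the next session gets fresh ones.
        self._tuids.clear()
```

Because each agreement gets its own random PUID, two services cannot link a visitor simply by comparing the identifiers they receive.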

While users can enter into agreements with services in which they agree to provide a PUID or TUID and no personally identifying data, their interactions may still be traceable to them, perhaps through their IP address. Users who are concerned about this can use P3P in conjunction with an anonymizing service or tool such as the Anonymizer, LPWA, Onion Routing, or Crowds, which are described in other articles in this issue.

While anonymous browsing may account for many Web interactions, there will be times when services solicit information from a user. Often such information is necessary in order to complete a transaction that a user has initiated. For example, services may require payment information to bill a user for a purchase or shipping information to deliver a product to the user through the postal mail. The P3P user data repository can store the information that users are willing to share with services with which they have reached an agreement.

The P3P user data repository provides benefits for both users and services. Users benefit from not having to retype or keep track of data elements that may be requested multiple times. Services benefit from receiving consistent data from users each time they return to a Web site. There are also privacy benefits associated with coupling data solicitation and exchange with the P3P mechanism for disclosing privacy practices. Services may retrieve data from a user’s repository as needed rather than storing it in a central database, thus giving users more control over their information. In addition this coupling reduces ambiguities. Rather than promoting general disclosures over types of information collected -- which may be poorly characterized -- the disclosures apply directly to the information solicited.

It will be important that implementers take care to implement data repositories in such a way that a user's data is protected from rogue applets, viruses, and other processes that might try to gain unauthorized access to this data.

P3P defines a base set of commonly requested data elements with which all P3P user agents should be familiar. These elements include the user’s name, birth date, postal address, phone number, email address, and similar information. Each element is identified by a standard name and assigned a data format. Whenever possible, the elements have been assigned formats consistent with other standards in use on the Internet, for example vCard [9]. Both individual elements and sets of elements may be requested. For example, a service might request an entire birth date or just a year of birth. By standardizing these elements, we hope to reduce accidental -- or purposeful -- user confusion resulting from requests by different services for the same data element but under different names.
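If element names are hierarchical, as the dotted names in Figure 1 (`User.Bdate.Year`) suggest, a single function can serve a request for either a whole set of elements or one member. The Python sketch below treats a request for `User.Bdate` as a request for every stored element beneath it. The flat dictionary layout and the `User.Bdate.Month`/`User.Bdate.Day` names are assumptions extrapolated from that naming pattern, not a transcription of the base data set.

```python
def resolve(request: str, repository: dict[str, str]) -> dict[str, str]:
    """Return the named element, or every stored element nested under it."""
    if request in repository:
        return {request: repository[request]}
    prefix = request + "."
    return {name: value for name, value in repository.items() if name.startswith(prefix)}


# Hypothetical repository contents.
repo = {
    "User.Bdate.Year": "1970",
    "User.Bdate.Month": "04",
    "User.Bdate.Day": "12",
    "User.Name.First": "Pat",
}
```

Here `resolve("User.Bdate", repo)` returns all three birth date parts, while `resolve("User.Bdate.Year", repo)` returns only the year.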

User agent implementations may allow users to pre-populate their repositories with data. Or, user agents may prompt users to enter data when it is requested and save it to the repository automatically for future requests. Note that even when a data element is already stored in a repository, user agents may not send it to a service without the user’s consent. Information is sent only after an agreement has been reached.
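The rule that stored data leaves the repository only under an agreement, with prompting to fill gaps, might look like the following hypothetical Python sketch. The `release` function and the `ask_user` callback, which returns `None` when the user declines, are illustrative assumptions.

```python
from typing import Callable, Optional


def release(
    requested: list[str],
    agreed: set[str],
    repository: dict[str, str],
    ask_user: Callable[[str], Optional[str]],
) -> dict[str, str]:
    """Release only elements covered by the agreement; prompt for (and save) missing ones."""
    out: dict[str, str] = {}
    for name in requested:
        if name not in agreed:
            continue  # never sent without an agreement, even if already stored
        if name not in repository:
            value = ask_user(name)
            if value is None:
                continue  # the user declined to supply it
            repository[name] = value  # saved for future requests
        out[name] = repository[name]
    return out
```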

In addition to frequently requested data elements, the base set also includes the PUID and TUID elements and a set of abstract elements that do not have static values stored in the repository. Rather, abstract elements represent information exchanged in the course of an HTTP interaction. For instance, the abstract elements include both client and server clickstream data, server-stored negotiation history, and form data. The abstract form element is used to indicate that a service proposes to collect data through an HTML form rather than through P3P mechanisms, thus giving the service greater control over the presentation of user prompts. The service can use a standard set of categories defined in the harmonized vocabulary to describe the type of information to be collected through the form. For example, if the service wishes to give the user an opinion survey, rather than enumerating all of the questions on the survey it might simply declare that it is collecting form data of the "preference data" category and explain the purposes for which the data will be used. Furthermore, the form element can be used to signal user agents to look for elements in the data repository that match the fields in a form a service presents to the user. Some user agents may be able to automatically fill in these fields with data from the repository.
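Automatic form filling could work roughly as follows. The agent maps HTML form field names to repository elements and suggests values only for the fields it recognizes, leaving the user to review them. How an agent recognizes matching fields is left to implementations. The field-to-element mapping in this Python sketch is entirely hypothetical.

```python
# Hypothetical mapping from common form field names to base data element names.
FIELD_HINTS = {
    "firstname": "User.Name.First",
    "first_name": "User.Name.First",
    "gender": "User.Gender",
    "birthyear": "User.Bdate.Year",
}


def prefill(form_fields: list[str], repository: dict[str, str]) -> dict[str, str]:
    """Suggest values for recognized fields; unrecognized or unknown ones stay blank."""
    suggestions: dict[str, str] = {}
    for field in form_fields:
        element = FIELD_HINTS.get(field.lower())
        if element is not None and element in repository:
            suggestions[field] = repository[element]
    return suggestions
```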

Because many services are likely to want to collect information not contained in the base set, P3P includes a mechanism (again, derived from XML namespaces [6]) for services to declare their own sets of data elements and to request that users add them to their repositories.

Some user agents might also allow users to specify multiple personae and associate a different set of data element values with each persona. Thus users might specify different work and home personae, specify different personae for different kinds of transactions, and even make up a set of completely fictitious personae. By storing the data values that correspond to each persona in their repository, users will not have to keep track of which values go with each persona to maintain persistent relationships with services. A system similar to LPWA (described elsewhere in this issue) might be used to automatically generate pseudonymous personae with corresponding email addresses and other information.
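Keeping per-persona values in the repository could be as simple as one value table per persona, plus a record of which persona is used with each service. This Python sketch is illustrative. The class name, the persona labels, the `User.Online.Email` element name, and keying relationships by realm are all assumptions.

```python
from typing import Optional


class PersonaRepository:
    """Hypothetical repository holding a separate set of data values for each persona."""

    def __init__(self) -> None:
        self._values: dict[str, dict[str, str]] = {}
        self._persona_for_realm: dict[str, str] = {}

    def set_value(self, persona: str, element: str, value: str) -> None:
        self._values.setdefault(persona, {})[element] = value

    def bind(self, realm: str, persona: str) -> None:
        # Remember which persona a service sees, so later visits stay consistent.
        self._persona_for_realm[realm] = persona

    def get(self, realm: str, element: str) -> Optional[str]:
        persona = self._persona_for_realm.get(realm)
        if persona is None:
            return None
        return self._values.get(persona, {}).get(element)
```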

If P3P is to prove valuable to users, implementations must be user friendly. The P3P vocabulary provides a level of granularity that not everyone will want to configure initially. However, because people have varying sensitivity towards privacy, we cannot afford to reduce the amount of information expressed to all users to the granularity that is desired by the "lowest common denominator" -- those who want the least information. Consequently, well-designed abstractions and layered interfaces -- by which users initially choose from a small number of basic settings, and then if they desire, access advanced interfaces -- are critical to the success of P3P [10].
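A layered configuration can be modeled as a few named basic settings. Each setting expands into the detailed rules that an advanced interface would expose for editing. The Python sketch below is purely illustrative. The level names and the purpose and recipient labels are invented and do not correspond to the harmonized vocabulary's codes.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Rules:
    allowed_purposes: frozenset[str]
    allowed_recipients: frozenset[str]
    allow_identifiable: bool


# Hypothetical basic settings a first-time user chooses among.
BASIC_LEVELS = {
    "strict": Rules(frozenset({"completion of current activity"}), frozenset({"ours"}), False),
    "moderate": Rules(frozenset({"completion of current activity", "customization"}),
                      frozenset({"ours"}), False),
    "relaxed": Rules(frozenset({"completion of current activity", "customization", "research"}),
                     frozenset({"ours", "same practices"}), True),
}


def advanced_override(level: str, **changes) -> Rules:
    """Start from a basic level and adjust individual rules, as an advanced interface would."""
    return replace(BASIC_LEVELS[level], **changes)
```

Because `Rules` is immutable, an override produces a new rule set and leaves the shared basic levels untouched.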

Since users may not be comfortable with default settings developed by a software company, they might prefer to select a configuration developed by a trusted organization, their system administrator, or a friend. Thus P3P includes a mechanism for exchanging recommended settings. These "canned" configuration files are expressed in APPEL, A P3P Preference Exchange Language [11]. Rather than manually configuring a user agent, an unsophisticated user can select a trusted source from which to obtain a recommended setting. These are the settings the user agent will use when browsing the Web on behalf of its user.

Furthermore, not all configuration needs to happen the first time someone uses P3P, and users should not have to configure every setting upon installation. Instead, users can adopt a recommended setting to configure their most basic preferences. Afterwards, users can "grow their trust relationships" over time by accruing agreements with the services they frequent.

The P3P specification does not address or place requirements on the user interface. Although good user interfaces are very important, they need not be standardized. Indeed, we would like to encourage creativity and innovation in P3P user interfaces and not place unnecessary restrictions on them. At the same time, there are certain principles that we hope implementers will keep in mind so that their implementations, including their user interfaces, further the goals of P3P; these principles are captured in the P3P Guiding Principles NOTE [12].

The ability to offer explicit agreements on the basis of specific privacy disclosures is a compelling method of addressing policy concerns in the context of a decentralized and global medium. P3P applications will aid users' decision-making based on such disclosures. Consequently, P3P will likely be one of the first applications that enables trust relationships to be created and managed by the majority of Web users. However, these interactions must happen in a comprehensible, almost intuitive way -- akin to how we make sophisticated (but taken-for-granted) decisions in real life. Fortunately, P3P supports two mechanisms that can make this possible. First, it enables users to rely upon the trusted opinions of others through the identity of the service and assuring party, as well as through recommended settings (APPEL). Second, trust relationships can be interactively established over time, just as they are in the real world.

P3P is designed to accommodate the balance desired by individuals, markets, and governments with respect to information exchange, data protection, and privacy. However, this type of solution must be cast in a context cognizant of its primary assumption: decentralized, agent-assisted decision-making tools allow users to make meaningful decisions. P3P's success will be determined by how well users feel their privacy expectations are being met when using P3P. This is dependent on the quality of the implementations, the abilities of users, and the presence of a framework that promotes the use and integrity of disclosures. With good implementations, users of P3P should be confident that they will interact only with services whose stated practices match their preferences. However, P3P only prevents the disclosure of information to services with whom the user has not reached an agreement; it does not guarantee that information will be used in the agreed-to manner. External enforcement mechanisms may be necessary to give users confidence that services accurately state their practices and abide by their agreements.

Furthermore, other external factors will have a significant impact on how technologies like P3P fare as they are implemented and adopted. Will expectations of higher levels of privacy than are currently offered force a change in market practices? Will the tenacity of existing practices require people to modify their expectations? Or in practice, do people actually care about privacy to the degree that recent surveys would have us believe? The answers to these questions will not be determined by technology alone.

The proposal elements described below are used to convey a service's information practices. Numbers in parentheses indicate the codes used to represent each option in a P3P proposal.

The following proposal elements make assertions that apply to an entire proposal.

Realm - The Uniform Resource Identifier (URI) or set of URIs covered by the proposal.

Disclosure URI - The URI of a service's human-readable privacy policy. This policy must include contact information for the service provider.

Access to Identifiable Information - The ability of the individual to view personally-identifiable information and address questions or concerns to the service provider. Services may disclose one or more of the following access categories and provide a human-readable statement about their access practices at the disclosure URI:

Assurance (accountability) - An assuring party that attests that the service will abide by its proposal, asserts that the service follows guidelines in the processing of data, or makes other relevant assertions. Assurance may come from the service provider or an independent assuring party.

Other Disclosures - Services may indicate that they make either of the following additional disclosures as part of their human-readable policy:

The following proposal elements make assertions that apply to a set of data elements or data categories. Some may be applied to an entire proposal as well.

Consequence - A human-readable description of the benefits or other results of agreeing to a proposal.

Data category - A quality of a data element or class that may be used by the user's agent to determine what type of element is under discussion. The following data categories have been defined in the harmonized vocabulary:

Purpose - The reason a data element is collected. The following purposes have been defined in the harmonized vocabulary:

Identifiable Use - A declaration as to whether data is used in a way that is personally identifiable -- including linking it with identifiable information obtained from other sources. While some data is obviously identifiable (such as a person's full name), other data (such as zip code, salary, birth date) could allow a person to be identified, depending on how it is used.

Recipients - An organizational area, or domain, beyond the service provider and its agents where data may be distributed. The following recipient choices are provided in the harmonized vocabulary: